from IPython.display import Image

Image('../../../python_for_probability_statistics_and_machine_learning.jpg')

from __future__ import division

%pylab inline

Linear regression gets to the heart of statistics: Given a set of data points, what is the relationship of the data in-hand to data yet seen? How should information from one data set propagate to other data? Linear regression offers the following model to address this question:

That is, given specific values for $X$, assume that the conditional expectation is a linear function of those specific values. However, because the observed values are not the expectations themselves, the model accommodates this with an additive noise term. In other words, the observed variable (a.k.a. response, target, dependent variable) is modeled as,

where $\mathbb{E}(\epsilon_i)=0$ and the $\epsilon_i$ are iid and where the distribution function of $\epsilon_i$ depends on the problem, even though it is often assumed Gaussian. The $X=x$ values are known as independent variables, covariates, or regressors.

Let's see if we can use all of the methods we have developed so far to understand this form of regression. The first task is to determine how to estimate the unknown linear parameters, $a$ and $b$. To make this concrete, let's assume that $\epsilon \sim \mathcal{N}(0,\sigma^2)$. Bear in mind that $\mathbb{E}(Y|X=x)$ is a deterministic function of $x$. In other words, the variable $x$ changes with each draw, but after the data have been collected these are no longer random quantities. Thus, for fixed $x$, $y$ is a random variable generated by $\epsilon$. Perhaps we should denote $\epsilon$ as $\epsilon_x$ to emphasize this, but because $\epsilon$ is an independent, identically-distributed (iid) random variable at each fixed $x$, this would be excessive. Because of Gaussian additive noise, the distribution of $y$ is completely characterized by its mean and variance.

Using the maximum likelihood procedure, we write out the log-likelihood function as

Note that we suppressed the terms that are irrelevent to the maximum-finding. Taking the derivative of this with respect to $a$ gives the following equation:

Likewise, we do the same for the $b$ parameter

The following code simulates some data and uses Numpy tools to compute the parameters as shown,

import numpy as np

a = 6;b = 1 # parameters to estimate

x = np.linspace(0,1,100)

y = a*x + np.random.randn(len(x))+b

p,var_=np.polyfit(x,y,1,cov=True) # fit data to line

y_ = np.polyval(p,x) # estimated by linear regression

%matplotlib inline

from matplotlib.pylab import subplots

fig,axs=subplots(1,2)

fig.set_size_inches((9,3.5))

_=ax =axs[0]

_=ax.plot(x,y,'o',alpha=.3,color='gray',ms=10)

_=ax.plot(x,y_,color='k',lw=3)

_=ax.set_xlabel("$x$",fontsize=22)

_=ax.set_ylabel("$y$",fontsize=22)

_=ax.set_title("Linear regression; a=%3.3g b=%3.3g"%(p[0],p[1]))

_=ax = axs[1]

_=ax.hist(y_-y,color='gray')

_=ax.set_xlabel(r"$\Delta y$",fontsize=22)

fig.tight_layout()

#fig.savefig('fig-statistics/Regression_001.png')

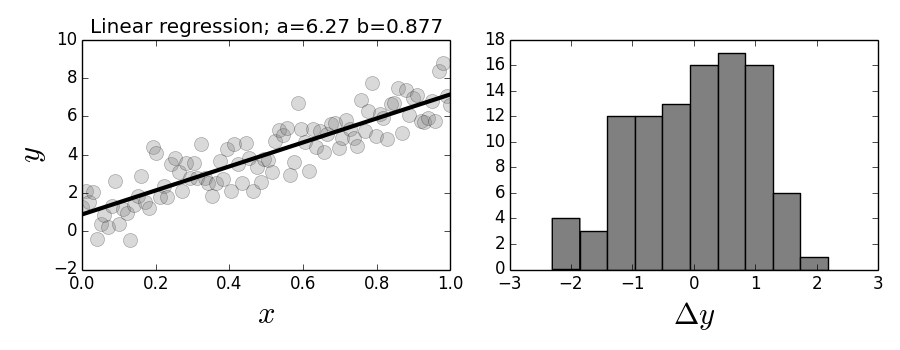

The panel on the left shows the data and regression line. The panel on the right shows a histogram of the regression errors.

The graph on the left of Figure shows the regression line plotted against the data. The estimated parameters are noted in the title. The histogram on the right of Figure shows the residual errors in the model. It is always a good idea to inspect the residuals of any regression for normality. These are the differences between the fitted line for each $x_i$ value and the corresponding $y_i$ value in the data. Note that the $x$ term does not have to be uniformly monotone.

To decouple the deterministic variation from the random variation, we can fix the index and write separate problems of the form

where $\epsilon_i \sim \mathcal{N}(0,\sigma^2)$. What could we do with just this one component of the problem? In other words, suppose we had $m$-samples of this component as in $\lbrace y_{i,k}\rbrace_{k=1}^m$. Following the usual procedure, we could obtain estimates of the mean of $y_i$ as

However, this tells us nothing about the individual parameters $a$ and $b$ because they are not separable in the terms that are computed, namely, we may have

but we still only have one equation and the two unknowns, $a$ and $b$. How about if we consider and fix another component $j$ as in

Then, we have

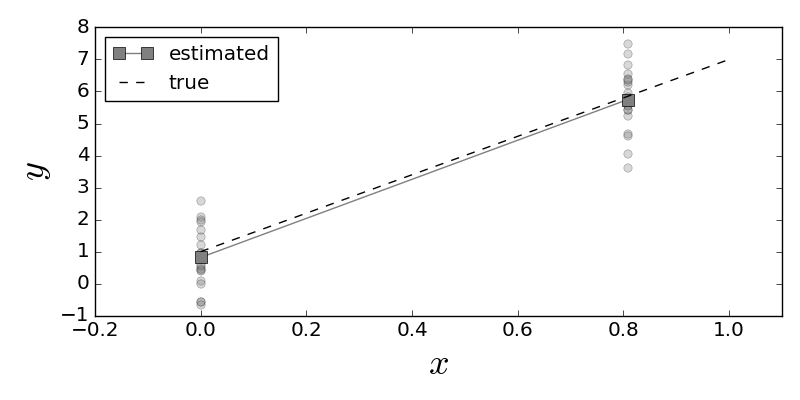

so at least now we have two equations and two unknowns and we know how to estimate the left hand sides of these equations from the data using the estimators $\hat{y_i}$ and $\hat{y_j}$. Let's see how this works in the code sample below.

x0, xn =x[0],x[80]

# generate synthetic data

y_0 = a*x0 + np.random.randn(20)+b

y_1 = a*xn + np.random.randn(20)+b

# mean along sample dimension

yhat = np.array([y_0,y_1]).mean(axis=1)

a_,b_=np.linalg.solve(np.array([[x0,1],

[xn,1]]),yhat)

The fitted and true lines are plotted with the data values. The squares at either end of the solid line show the mean value for each of the data groups shown.

Programming Tip.

The prior code uses the solve function in the Numpy linalg module, which

contains the core linear algebra codes in Numpy that incorporate the

battle-tested LAPACK library.

We can write out the solution for the estimated parameters for this case where $x_0 =0$

The expectations and variances of these estimators are the following,

The expectations show that the estimators are unbiased. The estimator $\hat{a}$ has a variance that decreases as larger points $x_i$ are selected. That is, it is better to have samples further out along the horizontal axis for fitting the line. This variance quantifies the leverage of those distant points.

Regression From Projection Methods. Let's see if we can apply our knowledge of projection methods to the general case. In vector notation, we can write the following:

where $\mathbf{1}$ is the vector of all ones. Let's use the inner-product notation,

Recall that $\mathbb{E}(\boldsymbol{\epsilon})=\mathbf{0}$. We can finally solve for $a$ as

That was pretty neat but now we have the mysterious $\mathbf{x}_1$ vector. Where does this come from? If we project $\mathbf{x}$ onto the $\mathbf{1}^\perp$, then we get the MMSE approximation to $\mathbf{x}$ in the $\mathbf{1}^\perp$ space. Thus, we take

Remember that $P_{\mathbf{1}^\perp} $ is a projection matrix so the length of $\mathbf{x}_1$ is at most $\mathbf{x}$. This means that the denominator in the $\hat{a}$ equation above is really just the length of the $\mathbf{x}$ vector in the coordinate system of $P_{\mathbf{1}^\perp} $. Because the projection is orthogonal (namely, of minimum length), the Pythagorean theorem gives this length as the following:

The first term on the right is the length of the $\mathbf{x}$ vector and last term is the length of $\mathbf{x}$ in the coordinate system orthogonal to $P_{\mathbf{1}^\perp} $, namely that of $\mathbf{1}$. We can use this geometric interpretation to understand what is going on in typical linear regression in much more detail. The fact that the denominator is the orthogonal projection of $\mathbf{x}$ tells us that the choice of $\mathbf{x}_1$ has the strongest effect (i.e., largest value) on reducing the variance of $\hat{a}$. That is, the more $\mathbf{x}$ is aligned with $\mathbf{1}$, the worse the variance of $\hat{a}$. This makes intuitive sense because the closer $\mathbf{x}$ is to $\mathbf{1}$, the more constant it is, and we have already seen from our one-dimensional example that distance between the $x$ terms pays off in reduced variance. We already know that $\hat{a}$ is an unbiased estimator and because we chose $\mathbf{x}_1$ deliberately as a projection, we know that it is also of minimum variance. Such estimators are known as Minimum-Variance Unbiased Estimators (MVUE).

In the same spirit, let's examine the numerator of $\hat{a}$ in Equation ref{eq:ahat}. We can write $\mathbf{x}_{1}$ as the following

where $P_{\mathbf{1}}$ is projection matrix of $\mathbf{x}$ onto the $\mathbf{1}$ vector. Using this, the numerator of $\hat{a}$ becomes

Note that,

so that writing this out explicitly gives

and similarly, we have the following for the denominator:

So, plugging all of this together gives the following,

with corresponding variance,

Using the same approach with $\hat{b}$ gives,

where

with variance

Qualifying the Estimates. Our formulas for the variance above include the unknown $\sigma^2$, which we must estimate from the data itself using our plug-in estimates. We can form the residual sum of squares as

Thus, the estimate of $\sigma^2$ can be expressed as

where $n$ is the number of samples. This is also known as the

residual mean square. The $n-2$ represents the degrees of freedom (df).

Because we estimated two parameters from the same data we have $n-2$ instead of

$n$. Thus, in general, $\texttt{df} = n - p$, where $p$ is the number of

estimated parameters. Under the assumption that the noise is Gaussian, the

$\texttt{RSS}/\sigma^2$ is chi-squared distributed with $n-2$ degrees of

freedom. Another important term is the sum of squares about the mean, (a.k.a

corrected sum of squares),

The $\texttt{SYY}$ captures the idea of not using the $x_i$ data and just using the mean of the $y_i$ data to estimate $y$. These two terms lead to the $R^2$ term,

Note that for perfect regression, $R^2=1$. That is, if the

regression gets each $y_i$ data point exactly right, then

$\texttt{RSS}=0$ this term equals one. Thus, this term is used to

measure of goodness-of-fit. The stats module in scipy computes

many of these terms automatically,

from scipy import stats

slope,intercept,r_value,p_value,stderr = stats.linregress(x,y)

where the square of the r_value variable is the $R^2$ above. The

computed p-value is the two-sided hypothesis test with a null hypothesis that

the slope of the line is zero. In other words, this tests whether or not the

linear regression makes sense for the data for that hypothesis. The

Statsmodels module provides a powerful extension to Scipy's stats module by

making it easy to do regression and keep track of these parameters. Let's

reformulate our problem using the Statsmodels framework by creating

a Pandas dataframe for the data,

import statsmodels.formula.api as smf

from pandas import DataFrame

import numpy as np

d = DataFrame({'x':np.linspace(0,1,10)}) # create data

d['y'] = a*d.x+ b + np.random.randn(*d.x.shape)

Now that we have the input data in the above Pandas dataframe, we can perform the regression as in the following,

results = smf.ols('y ~ x', data=d).fit()

The $\sim$ symbol is notation for $y = a x + b + \epsilon$, where the constant $b$ is implicit in this usage of Statsmodels. The names in the string are taken from the columns in the dataframe. This makes it very easy to build models with complicated interactions between the named columns in the dataframe. We can examine a report of the model fit by looking at the summary,

print results.summary2()

There is a lot more here than we have discussed so far, but the Statsmodels documentation is the best place to go for complete information about this report. The F-statistic attempts to capture the contrast between including the slope parameter or leaving it off. That is, consider two hypotheses:

In order to quantify how much better adding the slope term is for the regression, we compute the following:

The numerator computes the difference in the residual squared errors between including the slope in the regression or just using the mean of the $y_i$ values. Once again, if we assume (or can claim asymptotically) that the $\epsilon$ noise term is Gaussian, $\epsilon \sim \mathcal{N}(0,\sigma^2)$, then the $H_0$ hypothesis will follow an F-distribution 1 with degrees of freedom from the numerator and denominator. In this case, $F \sim F(1,n-2)$. The value of this statistic is reported by Statsmodels above. The corresponding reported probability shows the chance of $F$ exceeding its computed value if $H_0$ were true. So, the take-home message from all this is that including the slope leads to a much smaller reduction in squared error than could be expected from a favorable draw of $n$ points of this data, under the Gaussian additive noise assumption. This is evidence that including the slope is meaningful for this data.

and $n$.

The Statsmodels report also shows the adjusted $R^2$ term. This is a correction to the $R^2$ calculation that accounts for the number of parameters $p$ that the regression is fitting and the sample size $n$,

The $F(m,n)$ F-distribution has two integer degree-of-freedom parameters, $m$↩

This is always lower than $R^2$ except when $p=1$ (i.e., estimating only $b$). This becomes a better way to compare regressions when one is attempting to fit many parameters with comparatively small $n$.

Linear Prediction. Using linear regression for prediction introduces some other issues. Recall the following expectation,

where we have determined $\hat{a}$ and $\hat{b}$ from the data. Given a new point of interest, $x_p$, we would certainly compute

as the predicted value for $\hat{ y_p }$. This is the same as saying that our best prediction for $y$ based on $x_p$ is the above conditional expectation. The variance for this is the following,

Note that we have the covariance above because $\hat{a}$ and $\hat{b}$ are derived from the same data. We can work this out below using our previous notation from ref{eq:ahat},

After plugging all this in, we obtain the following,

where, in practice, we use the plug-in estimate for the $\sigma^2$.

There is an important consequence for the confidence interval for $y_p$. We cannot simply use the square root of $\mathbb{V}(y_p)$ to form the confidence interval because the model includes the extra $\epsilon$ noise term. In particular, the parameters were computed using a set of statistics from the data, but now must include different realizations for the noise term for the prediction part. This means we have to compute

Then, the 95\% confidence interval $y_p \in (y_p-2\hat{\eta},y_p+2\hat{\eta})$ is the following,

where $\hat{\eta}$ comes from substituting the plug-in estimate for $\sigma$.

Extensions to Multiple Covariates¶

With all the machinery we have, it is a short notational hop to consider multiple regressors as in the following,

with the usual $\mathbb{E}(\boldsymbol{\epsilon})=\mathbf{0}$ and $\mathbb{V}(\boldsymbol{\epsilon})=\sigma^2\mathbf{I}$. Thus, $\mathbf{X}$ is a $n \times p$ full rank matrix of regressors and $\mathbf{Y}$ is the $n$-vector of observations. Note that the constant term has been incorporated into $\mathbf{X}$ as a column of ones. The corresponding estimated solution for $\boldsymbol{\beta}$ is the following,

with corresponding variance,

and with the assumption of Gaussian errors, we have

The unbiased estimate of $\sigma^2$ is the following,

where $\hat{ \boldsymbol{\epsilon}}=\mathbf{X}\hat{\boldsymbol{\beta}} -\mathbf{Y}$ is the vector of residuals. Tukey christened the following matrix as the hat matrix (a.k.a. influence matrix),

because it maps $\mathbf{Y}$ into $\hat{ \mathbf{Y} }$,

As an exercise you can check that $\mathbf{V}$ is a projection matrix. Note that that matrix is solely a function of $\mathbf{X}$. The diagonal elements of $\mathbf{V}$ are called the leverage values and are contained in the closed interval $[1/n,1]$. These terms measure of distance between the values of $x_i$ and the mean values over the $n$ observations. Thus, the leverage terms depend only on $\mathbf{X}$. This is the generalization of our initial discussion of leverage where we had multiple samples at only two $x_i$ points. Using the hat matrix, we can compute the variance of each residual, $e_i = \hat{y}-y_i$ as

where $v_i=V_{i,i}$. Given the above-mentioned bounds on $v_{i}$, these are always less than $\sigma^2$.

Degeneracy in the columns of $\mathbf{X}$ can become a problem. This is when two or more of the columns become co-linear. We have already seen this with our single regressor example wherein $\mathbf{x}$ close to $\mathbf{1}$ was bad news. To compensate for this effect we can load the diagonal elements and solve for the unknown parameters as in the following,

where $\alpha>0$ is a tunable hyper-parameter. This method is known as ridge regression and was proposed in 1970 by Hoerl and Kenndard. It can be shown that this is the equivalent to minimizing the following objective,

In other words, the length of the estimated $\boldsymbol{\beta}$ is penalized with larger $\alpha$. This has the effect of stabilizing the subsequent inverse calculation and also providing a means to trade bias and variance, which we will discuss at length in the section ref{ch:ml:sec:regularization}

Interpreting Residuals. Our model assumes an additive Gaussian noise term. We can check the voracity of this assumption by examining the residuals after fitting. The residuals are the difference between the fitted values and the original data

While the p-value and the F-ratio provide some indication of whether or not computing the slope of the regression makes sense, we can get directly at the key assumption of additive Gaussian noise.

For sufficiently small dimensions, the scipy.stats.probplot we discussed in

the last chapter provides quick visual evidence one way or another by plotting

the standardized residuals,

The other part of the iid assumption implies homoscedasticity (all $r_i$ have equal variances). Under the additive Gaussian noise assumption, the $e_i$ should also be distributed according to $\mathcal{N}(0,\sigma^2(1-v_i))$. The normalized residuals $r_i$ should then be distributed according to $\mathcal{N}(0,1)$. Thus, the presence of any $r_i \notin [-1.96,1.96]$ should not be common at the 5% significance level and is thereby breeds suspicion regarding the homoscedasticity assumption.

The Levene test in scipy.stats.leven tests the null hypothesis that all the

variances are equal. This basically checks whether or not the standardized

residuals vary across $x_i$ more than expected. Under the homoscedasticity

assumption, the variance should be independent of $x_i$. If not, then this is a

clue that there is a missing variable in the analysis or that the variables

themselves should be transformed (e.g., using the $\log$ function) into another

format that can reduce this effect. Also, we can use weighted least-squares

instead of ordinary least-squares.

Variable Scaling. It is tempting to conclude in a multiple regression that small coefficients in any of the $\boldsymbol{\beta}$ terms implies that those terms are not important. However, simple unit conversions can cause this effect. For example, if one of the regressors is in units of kilometers and the others are in meters, then just the scale factor can give the impression of outsized or under-sized effects. The common way to account for this is to scale the regressors so that

This has the side effect of converting the slope parameters into correlation coefficients, which is bounded by $\pm 1$.

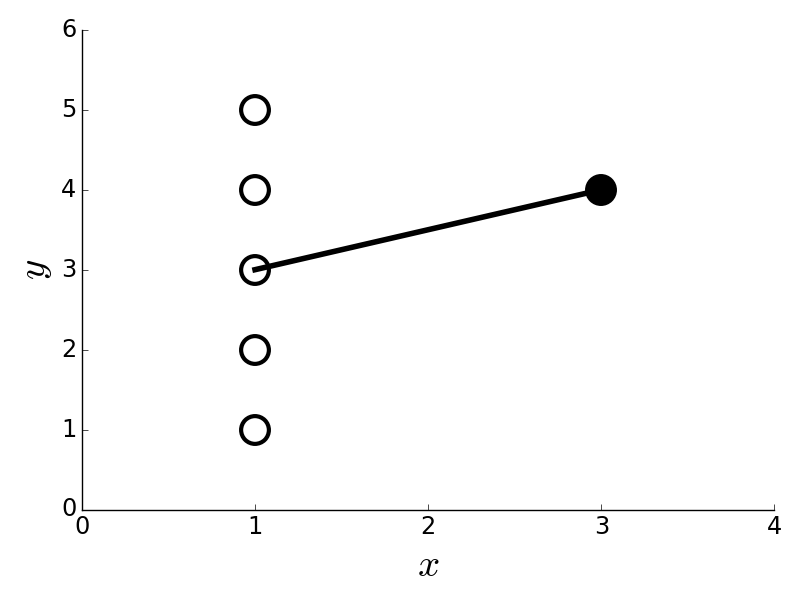

Influential Data. We have already discussed the idea of leverage. The concept of influence combines leverage with outliers. To understand influence, consider Figure.

The point on the right has outsized influence in this data because it is the only one used to determine the slope of the fitted line.

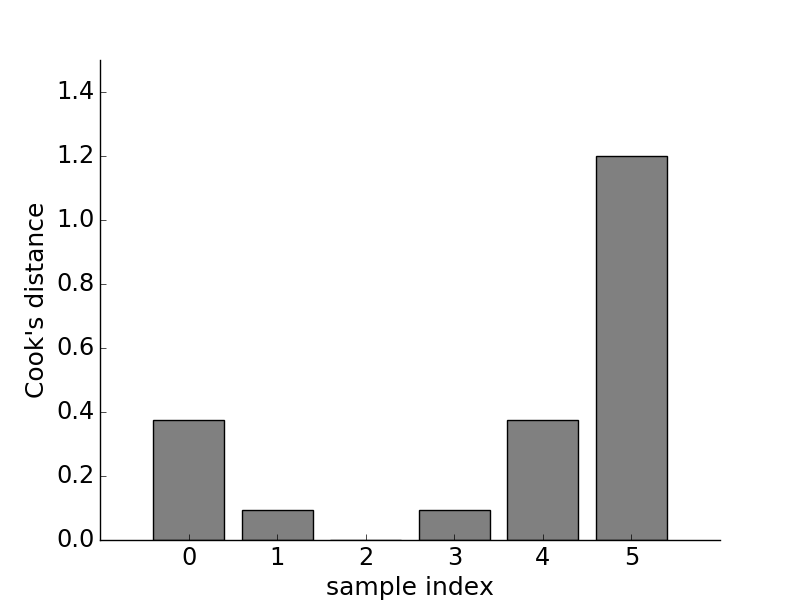

The point on the right in Figure is the only one that is contributing to the calculation of the slope for the fitted line. Thus, it is very influential in this sense. Cook's distance is a good way to get at this concept numerically. To compute this, we have to compute the $j^{th}$ component of the estimated target variable with the $i^{th}$ point deleted. We call this $\hat{y}_{j(i)}$. Then, we compute the following,

where, as before, $p$ is the number of estimated terms (e.g., $p=2$

in the bivariate case). This calculation emphasizes the effect of the outlier

by predicting the target variable with and without each point. In the case of

Figure, losing any of the points on the left cannot

change the estimated target variable much, but losing the single point on the

right surely does. The point on the right does not seem to be an outlier (it

is on the fitted line), but this is because it is influential enough to

rotate the line to align with it. Cook's distance helps capture this effect by

leaving each sample out and re-fitting the remainder as shown in the last

equation. Figure shows the calculated Cook's

distance for the data in Figure, showing that

the data point on the right (sample index 5) has outsized influence on the fitted line. As a rule

of thumb, Cook's distance values greater than one are suspect.

The calculated Cook's distance for the data in [Figure](#fig:Regression_005).

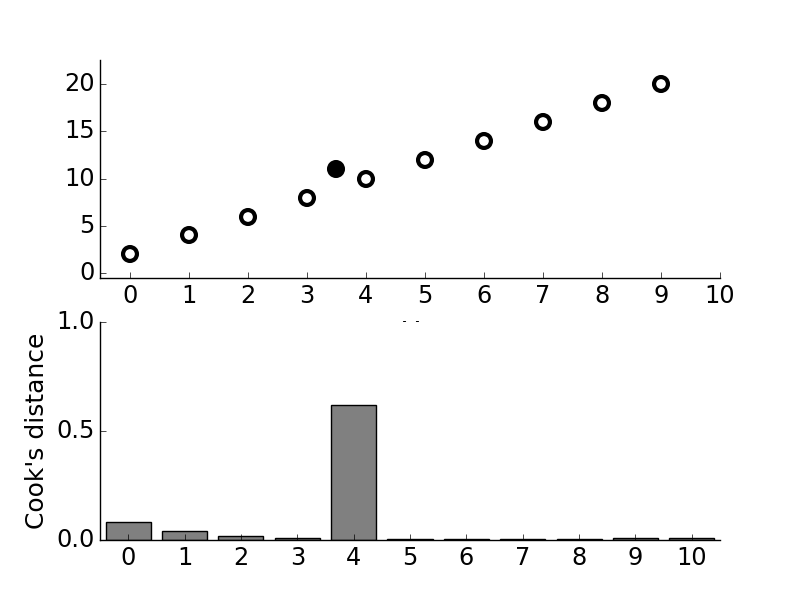

As another illustration of influence, consider Figure which shows some data that nicely line up, but with one outlier (filled black circle) in the upper panel. The lower panel shows so-computed Cook's distance for this data. As shown Cook's distance emphasizes the presence of the outlier. Because the calculation involves leaving a single sample out and re-calculating the rest, it can be a time-consuming operation suitable to relatively small data sets. There is always the temptation to downplay the importance of outliers because they conflict with a favored model, but outliers must be carefully examined to understand why the model is unable to capture them. It could be something as simple as faulty data collection, or it could be an indication of deeper issues that have been overlooked. The following code shows how Cook's distance was compute for Figure and Figure.

fit = lambda i,x,y: np.polyval(np.polyfit(x,y,1),i)

omit = lambda i,x: ([k for j,k in enumerate(x) if j !=i])

def cook_d(k):

num = sum((fit(j,omit(k,x),omit(k,y))- fit(j,x,y))**2 for j in x)

den = sum((y-np.polyval(np.polyfit(x,y,1),x))**2/len(x)*2)

return num/den

Programming Tip.

The function omit sweeps through the data and excludes

the $i^{th}$ data element. The embedded enumerate function

associates every element in the iterable with its corresponding

index.

The upper panel shows data that fit on a line and an outlier point (filled black circle). The lower panel shows the calculated Cook's distance for the data in upper panel and shows that the tenth point (i.e., the outlier) has disproportionate influence.